What are Historical Place Names?

Historical Place Names (HPN) are not contemporary place names. Rather, they are place names that existed in history and are mentioned in various historical sources, for instance Camelot or Atlantis. These two places appear in historical written texts, historical maps (cartographic information) and visual items (iconographical information depicted in landscape paintings, town panoramas, etc.) but never existed in reality. However, a Historical Place Name can equally be a place that actually existed, or still exists, under a different name. In other words, a Historical Place Name is a place appellation that could refer to several places and whose application may have changed over time (e.g. Colonia in 52 AD and Köln in 2015 AD; this concept of place name is described in the CIDOC-CRM data model as E48 Place Name). It may also refer to time, as a historical identifier that distinguishes several places (e.g. medieval and contemporary Vilnius as different polygons; the concept is described in CIDOC-CRM as E42 Period), and/or to a kind of immovable heritage as “non-material products of our minds” (e.g. the linguistic origin and analysis of HPN for understanding lost native cultures; the concept is described under E28 Conceptual Object in CIDOC-CRM).

What are the problems with HPN?

During digitisation, when one transcodes reality from analogue to digital, Historical Place Names are used as identifiers for a certain historical space. HPN becomes a link between past reality and contemporary virtuality, ensuring quality of digitisation, interoperability of reality and virtuality, internal interoperability of data within the information system and external interoperability of several systems, as well as efficient communication of digital data.

However, it is also important to take into account the “human factor” of HPN. We call this “historical or cultural multilingualism”, meaning terminological differences for the same place across languages and cultures. These differences stem from the cultural differences between nations (e.g. Greece in English and Ελλάδα in Greek). In addition, understandings of historical space are shaped by nationalistic historical narratives, which have a considerable impact on history and social geography (e.g. the difference between German Königsberg and Russian Калининград).

Computing technology makes it possible to maximise the objectivity of geographical representations of reality (e.g. using GIS data). Paradoxically, during the digitisation of cultural heritage, we link it less to the realities of modern geographical space than to the historical spaces of the 19th century, which were marked by historical narrative myths. For example, in March 2015 only 8,731 objects on Europeana.eu were linked to the place name Gdansk, whereas the name Danzig had 34,449 objects associated with it.

What is the LoCloud HPN microservice?

The LoCloud HPN microservice, developed by Vilnius University Faculty of Communication in collaboration with LoCloud partners, is a semi-automatic historical geo-information management tool and web service. It enables local cultural institutions to collaborate on crowdsourcing a thesaurus of HPNs, enriching metadata about cultural objects with HPN references, and re-using historical geo-information across applications.

HPN microservices are oriented towards providers and aggregators rather than end-users. They perform the following functions:

1. Visualisation, crowdsourcing and enrichment of providers’ and aggregators’ historical geo-data via:

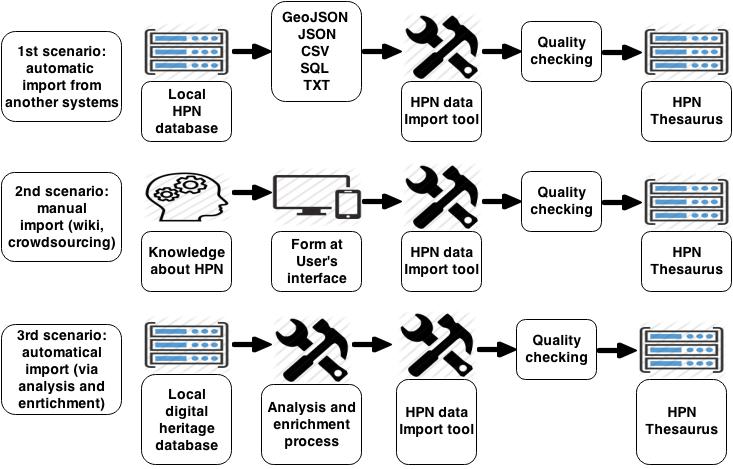

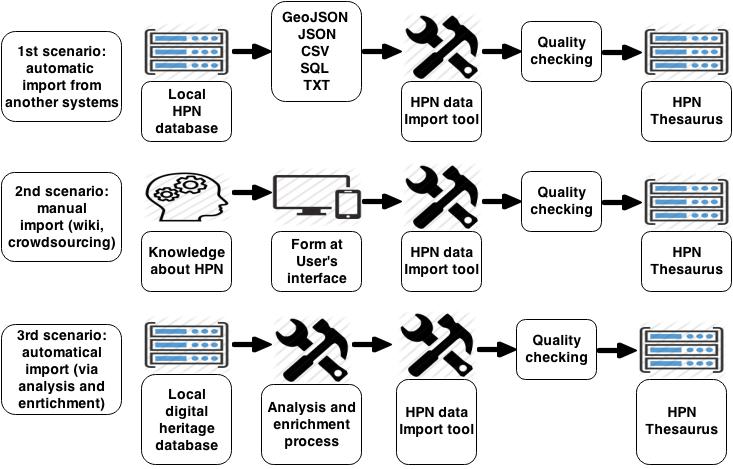

- automatic transfer of historical geo-data from local/international databases and information systems to the HPN Thesaurus. The system can map and transfer historical geo-data from local systems to the LoCloud HPN Thesaurus, importing geo-data in GeoJSON, JSON, CSV, SQL and TXT formats and matching it with historical geo-data already in the HPN Thesaurus using an automatic data import tool.

- manual provision of historical geo-data via a dedicated user interface.
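As a rough illustration of the first step of such an import, the sketch below reads point features from a GeoJSON document into simple `(name, latitude, longitude)` records that could then be matched against a thesaurus. It is not the microservice's import tool: the function name `read_point_features` and the `name` property key are assumptions made for this example. The only format detail relied on is that GeoJSON stores coordinates as `[longitude, latitude]`.

```python
import json


def read_point_features(geojson_text, name_key="name"):
    """Return (name, lat, lon) tuples for named Point features.

    `name_key` is a hypothetical property name; real provider data
    may store the place name under a different key.
    """
    data = json.loads(geojson_text)
    records = []
    for feature in data.get("features", []):
        geometry = feature.get("geometry") or {}
        properties = feature.get("properties") or {}
        # Skip non-point geometries and features without a name.
        if geometry.get("type") != "Point" or name_key not in properties:
            continue
        # GeoJSON coordinate order is [longitude, latitude].
        lon, lat = geometry["coordinates"][:2]
        records.append((properties[name_key], lat, lon))
    return records


sample = """{"type": "FeatureCollection", "features": [
  {"type": "Feature",
   "geometry": {"type": "Point", "coordinates": [18.65, 54.35]},
   "properties": {"name": "Danzig"}}
]}"""

print(read_point_features(sample))  # [('Danzig', 54.35, 18.65)]
```

Swapping latitude and longitude is a common source of mismatches when comparing imported records with existing entries, which is why the sketch normalises the order at import time.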

The HPN enrichment scenarios are presented below:

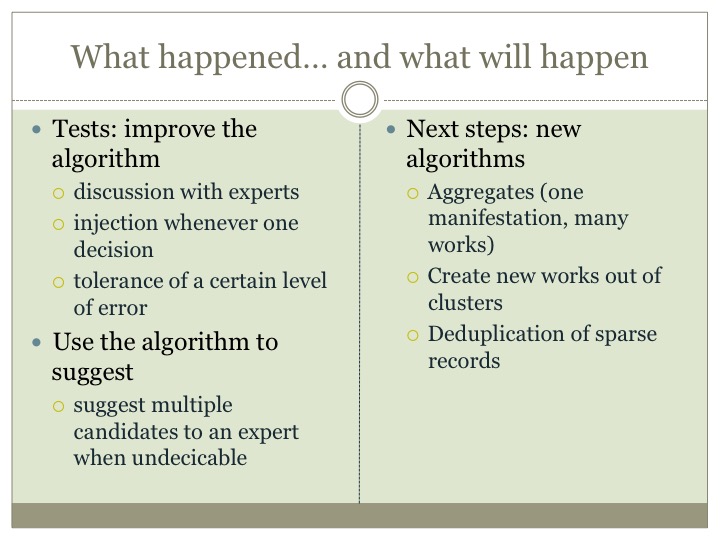

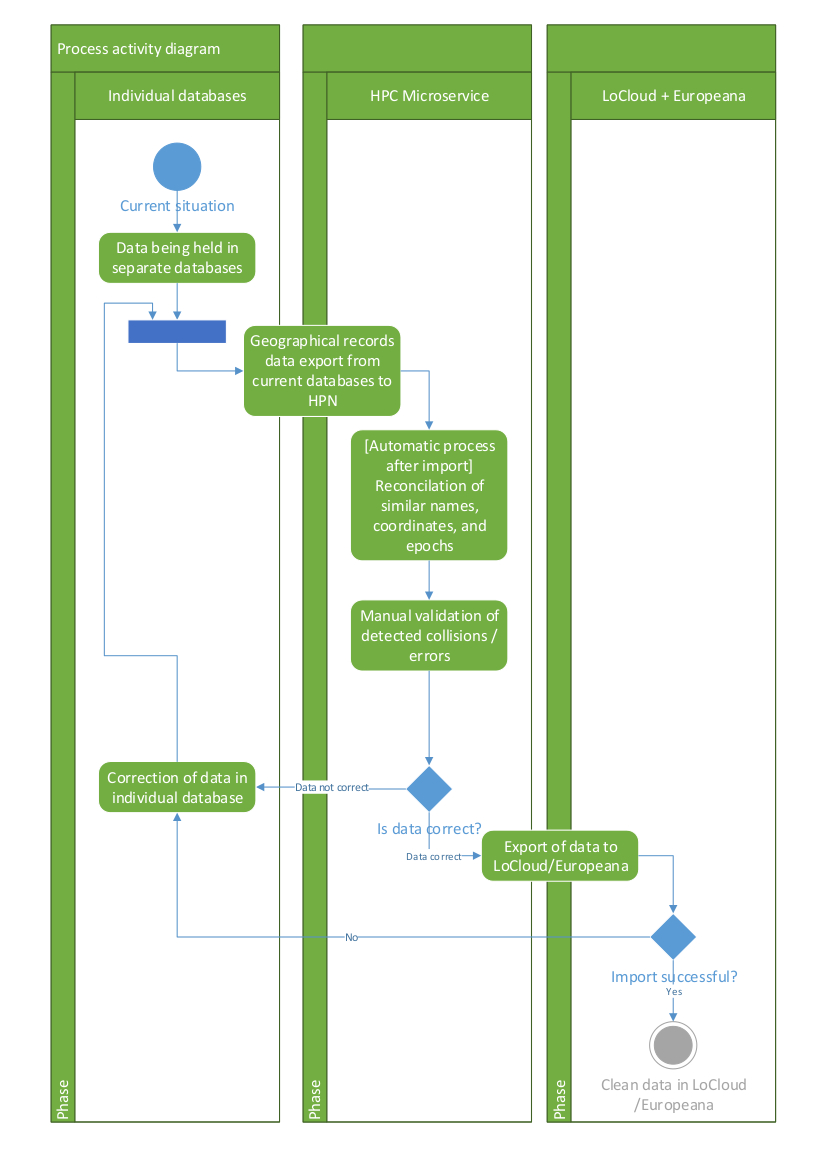

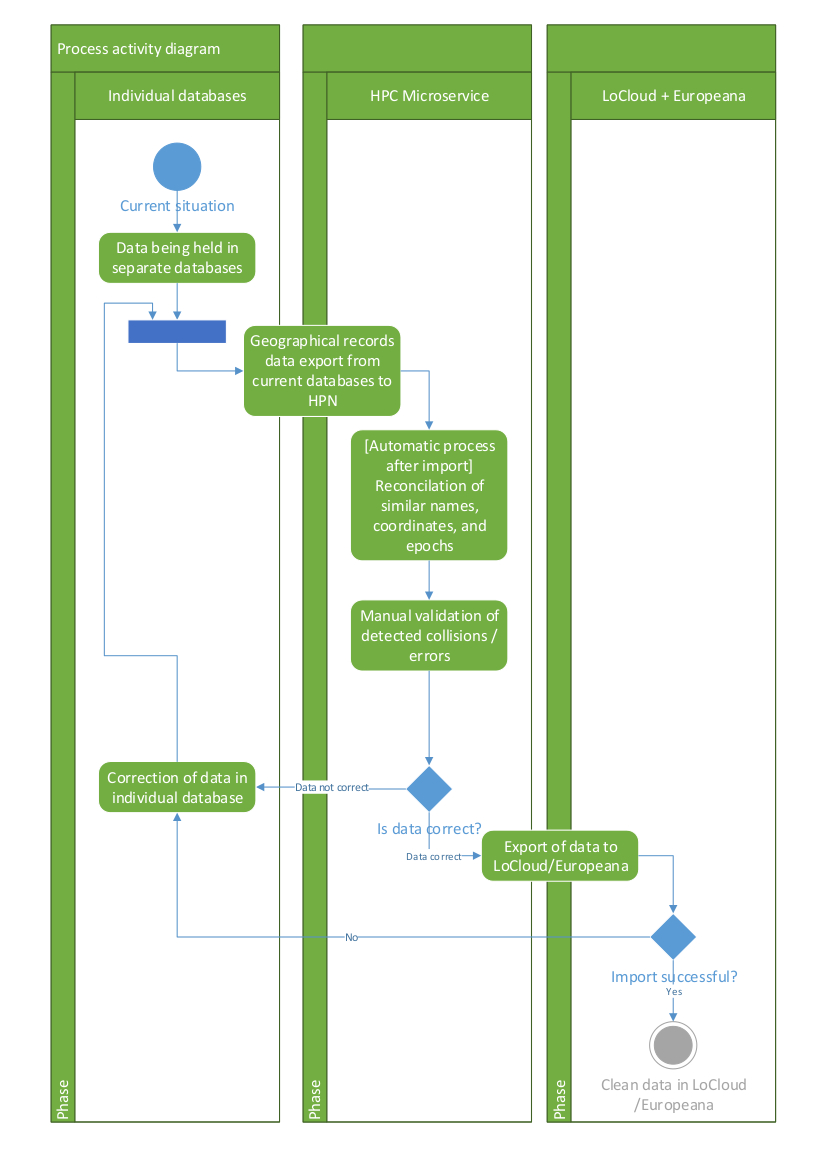

2. HPN interoperability provision is achieved by automatically checking the transferred data and linking local HPN with contemporary geographical names during the metadata harvesting process. It connects the various forms (historical, linguistic, writing system) of historical place names in local systems with contemporary place names and GIS data (latitude and longitude). Geonames are stored in the HPN Thesaurus, which connects a historical name with its current name and/or administrative dependencies, or connects different variations of the same place name, etc. This is based on an integrated algorithm that rationalises and reconciles similar place names by estimating similarities between names and geographic coordinates. The algorithm also performs an accuracy check (e.g. if a name and the relevant coordinates are exact, the match is ranked at 100%; if the name is exact but the coordinates deviate by 50%, it is ranked at 75%; if the name is not exact and the coordinates fall outside the allowed deviation, it is ranked at 0%). The whole process of analysis and enrichment is presented below:

More technical documentation about the HPN microservice can be found at http://tautosaka.llti.lt/en/unitedgeo and http://support.locloud.eu/tiki-index.php?page=HomePage.
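The accuracy check described above can be approximated with a short sketch. This is not the microservice's actual algorithm, which is not specified here. It is one possible scoring rule that reproduces the three example rankings (100%, 75%, 0%) under stated assumptions: name similarity and coordinate similarity are weighted equally, "deviate by 50%" is read as half of an allowed distance, name similarity uses Python's `difflib.SequenceMatcher`, and distance is computed with the haversine formula. The names `match_score` and `allowed_km` are hypothetical.

```python
import math
from difflib import SequenceMatcher


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points."""
    radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * radius_km * math.asin(min(1.0, math.sqrt(a)))


def match_score(name_a, coords_a, name_b, coords_b, allowed_km=10.0):
    """Return a 0-100 score for two (name, (lat, lon)) candidates.

    Assumption: equal weights for name and coordinate similarity;
    `allowed_km` is a hypothetical tolerance, not a documented value.
    """
    name_sim = SequenceMatcher(None, name_a.casefold(), name_b.casefold()).ratio()
    distance = haversine_km(*coords_a, *coords_b)
    # 1.0 at zero distance, falling linearly to 0.0 at the allowed deviation.
    coord_sim = max(0.0, 1.0 - distance / allowed_km)
    # Inexact name and coordinates outside the tolerance: no match at all.
    if name_sim < 1.0 and coord_sim == 0.0:
        return 0.0
    return round(100 * (name_sim + coord_sim) / 2, 1)


vilnius = (54.6872, 25.2797)
print(match_score("Vilnius", vilnius, "Vilnius", vilnius))  # 100.0
```

With an exact name and a distance equal to half of `allowed_km`, the same function yields 75, matching the second example in the text. A linear fall-off is only one option; a real reconciliation service might use a different distance penalty or weight names more heavily than coordinates.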

How could the LoCloud HPN microservice be used?

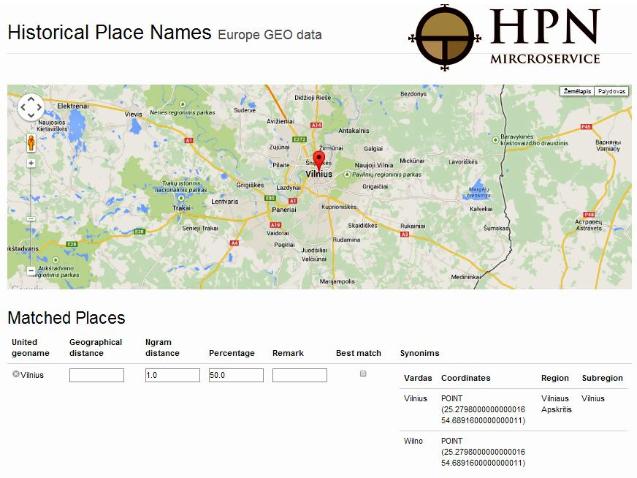

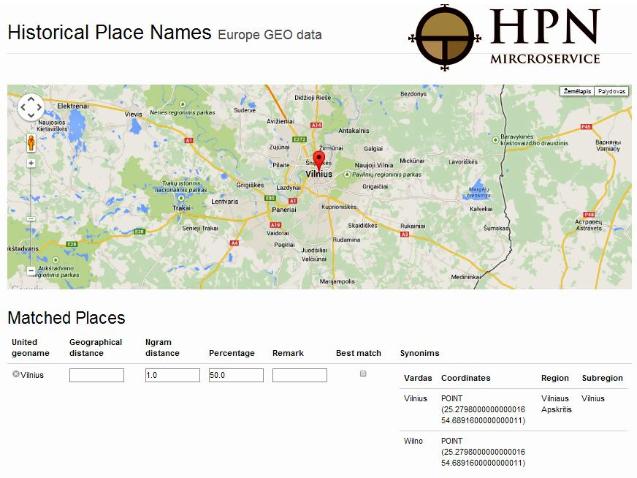

The LoCloud HPN microservice could be used by local history researchers (as unregistered users) to check whether a Historical Place Name is available in the LoCloud HPN Thesaurus, or to get information about how an HPN is connected to contemporary place names, linguistic variations and geographical coordinates of place names. The service can also support the crowdsourcing of new Historical Place Names to augment the LoCloud HPN Thesaurus. A visual example of HPN in the microservice is presented below:

In a more controlled setting, the service can also be used by heritage database administrators (as registered users) in order to:

a. export selected LoCloud HPN Thesaurus datasets and make them available for enriching the other applications (for instance local systems);

b. interconnect contemporary place names, or add different historical and linguistic forms as well as geographical coordinates for place names in the system;

c. collaborate in developing the LoCloud HPN Thesaurus by contacting us and sending local data to be integrated as a batch by the service.