EDM turns five, so now what?

Impressions from the workshop

The Europeana Data Model (EDM) recently turned five! To celebrate, Europeana organised a special workshop just before the Europeana General Assembly, gathering 30 experts who have significantly contributed to the development of the model. Here, we run through the different discussions and highlight the main takeaway points.

In a stunning vaulted room in the Rijksmuseum, Antoine Isaac and Valentine Charles from the Europeana Foundation opened the workshop by presenting the achievements of EDM and some short-term plans for the coming months.

Over the past five years, EDM has been consolidated and enriched with extensions and refinements that support requirements not addressed in the original release. The community around EDM has played a key role in defining these new requirements and extensions. Europeana’s future EDM extensions will touch upon annotations, a finer representation of rights and user-created sets, among other areas (refer to the presentations for more details).

EDM is also an enabler for data quality, another area where we will concentrate our efforts, for instance by improving data validation.

Lastly, Europeana will pursue its standardization activities by voicing the requirements of the cultural heritage sector in other communities, and by continuing its work on inventories of linked and open vocabularies and of EDM extensions.

Our experts welcomed all these achievements but also brought new challenges to the table. It was these that were discussed and explored during the daylong workshop.

- How does Europeana decide what is implemented in EDM? For instance, why is the Event entity still not implemented?
- Why does Europeana not take user needs into account in the development of the model?
- What are the boundaries of EDM, and should it be a standard for the whole cultural heritage sector or should it be only for Europeana?

Two lightning talks, by Lizzy Jongma (Rijksmuseum) and Marko Knepper (University Library J.C. Senckenberg, Frankfurt am Main), raised further questions about the role of EDM in the Europeana Collections portal[1] and about best practices for developing EDM extensions[2].

The group selected a series of topics that were then discussed during two roundtable sessions. The following report summarises the discussions at each roundtable and captures the diversity of the topics covered during this half-day workshop.

What is the end goal of the Europeana Data Model?

The first area of discussion addressed the bigger picture for the Europeana Data Model. What is the end goal of this data modelling endeavour? And is there only one?

There is a general acknowledgement that EDM should not have one single, fixed end goal. EDM is here to serve the Europeana community, but that community is bigger than the sum of all projects currently part of it. There has been talk, for example, of using EDM to support local aggregation projects; a specific suggestion was made for a cluster of cultural collections in Amsterdam. This requires a lot of flexibility, however, and a governance model that gives everyone involved a say in the development and maintenance of the model.

This means that the community should learn to be comfortable with different implementations of EDM. This is particularly true if EDM is employed beyond the core Europeana data aggregation scenario, and it should then be accepted that Europeana does not implement all of it. Europeana must be what its Network Association members want it to be, but at a central level it cannot take into account all the requirements of all domains. While Europeana helps connect objects, it should not seek to be a complete centralized repository.

Participants agreed that a ‘core’ EDM implementation could be kept as a reference or standard for the community. Europeana will maintain this core, but it should not be responsible for creating and maintaining extensions. Some modelling aspects can easily be ‘delegated’ to third-party profiles and extensions.

“There are subsets of EDM with local solutions or work-arounds (...) I would not go for many local solutions (...) more costly than [a] global solution. In this group there is enough brain power to solve this!"

As a result, from the perspective of governance, the participants suggested that Europeana should be predominantly concerned with validating the quality of the EDM core and leave the rest to third parties, without seeking to enforce strict control over how extensions are created. This does not mean that no validation should happen: coordination will be necessary so that the community builds a consistent data ecosystem. In particular, EDM extensions need to be mappable to the core. This will require guidance from Europeana, especially in recommending processes and methods for creating extensions that respect the EDM core and follow best practices for data modelling. In addition, Europeana should provide tools that allow the community to keep third-party extensions synchronized with the core.
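To make the idea of extensions that remain mappable to the core more concrete, here is a minimal sketch. It assumes a record is a plain dictionary from property names to lists of values, and that each extension publishes a table mapping its own properties to core properties (a “dumb-down” rule). The `ext:` namespace and its properties are hypothetical; the targets (`dcterms:spatial`, `dc:contributor`, `dc:description`) are Dublin Core terms that EDM reuses.

```python
# Hypothetical extension properties mapped to core EDM / Dublin Core properties.
DUMB_DOWN = {
    "ext:performanceVenue": "dcterms:spatial",
    "ext:castMember": "dc:contributor",
    "ext:programmeNote": "dc:description",
}


def dumb_down(record, mapping=DUMB_DOWN):
    """Return (core_record, unmapped_properties).

    Properties in the hypothetical `ext:` namespace are renamed to their core
    equivalent when the mapping knows them; unknown extension properties are
    dropped and reported so that no unmapped term leaks into the core record.
    Non-extension properties are copied unchanged.
    """
    core = {}
    unmapped = []
    for prop, values in record.items():
        if prop.startswith("ext:"):
            target = mapping.get(prop)
            if target is None:
                unmapped.append(prop)
                continue
        else:
            target = prop
        # Merge values when several properties land on the same core property.
        core.setdefault(target, []).extend(values)
    return core, unmapped
```

Reporting unmapped properties, rather than silently discarding them, is one way an extension owner could check that their profile stays synchronized with the core.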

A key part of successfully building such an interoperable yet varied ecosystem of cultural data is keeping a clear connection between how EDM is used and the applications it serves. In the workshop there was a lot of discussion on whether EDM is for creating, sharing or presenting data, or a mixture of all of these. Who is the user of EDM? Probably not the creators of original metadata, who have their own models and should not have to keep up with changing implementations of EDM. The most natural users, then, are metadata experts who sit at the intermediate level of data transformation, and who ideally seek to minimize the loss of information in their data exchange projects.

There were also discussions about whether extensions of EDM could enhance display and discoverability. The audience agreed, however, that the purpose of EDM is to aggregate and map data, not to provide a consumer format. There was also consensus that EDM is not meant for non-specialists or for people outside the cultural heritage (CH) sector: it should focus on interoperability within the CH sector. Note that other vocabularies, such as Schema.org, are emerging as a means to reach data consumers outside the CH sector. EDM should ease interoperability with them, so that cultural data can be exchanged in a wider context.

Moreover, plans for EDM extensions should go hand in hand with implementation and enforcement efforts. To take one example, EDM features potentially important to the portal were discussed, such as events and multiple proxies (see below). But without implementation (including some measure of display of EDM elements), new extensions such as these will flounder.

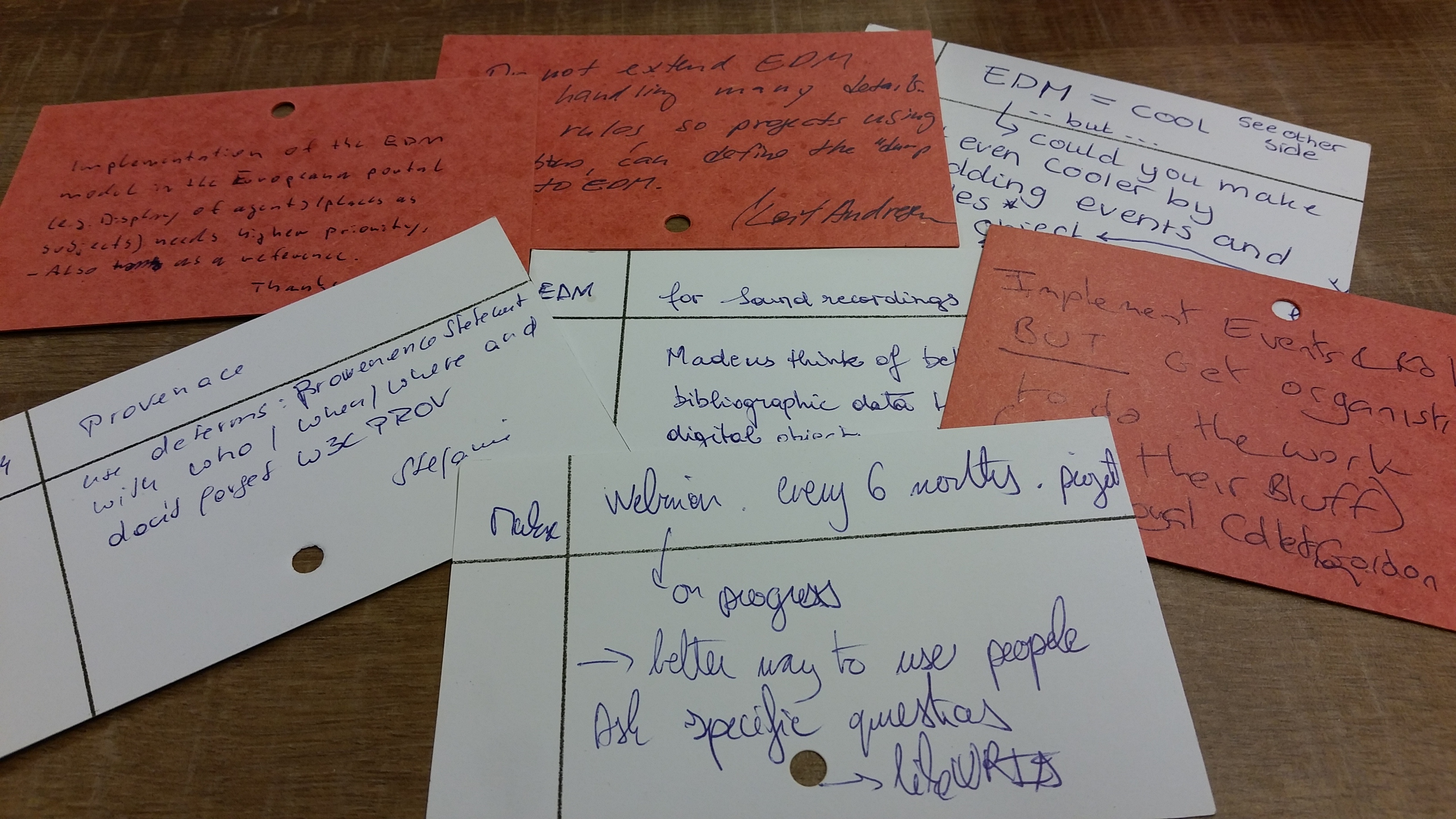

“Implementation of the EDM model in the Europeana portal (e.g. display of agents, places as subjects) needs higher priority (...) Thanks. Marko"

A simple model for complex data

EDM has evolved a lot over the past five years and now encompasses more data requirements. From the original set of properties of the Europeana Semantic Elements (ESE), EDM has grown into a network that some participants have found “too complex”. The basic concept of EDM is to provide a core set of classes and properties on top of which extensions can be developed to address new requirements. However, the workshop participants complained that the simple core has dissolved into a very complex structure.

“EDM=Kafka"

The participants asked for further clarification on the Europeana strategy for developing EDM, connecting with the general governance discussion above: who is EDM for? How should it evolve, and how controlled should this evolution be?

It was emphasised that the initial approach taken for developing EDM remains the most appropriate: EDM should continue to build on existing ontologies, which are then organised around the existing EDM core. This core should remain modular so that it can support both general and highly specific requirements without hindering the interoperability of data.

As explained in the White Paper “Enhancing the Europeana Data Model”, this modular approach is the one we have chosen for developing the model. However, it is true that the balance between keeping a low threshold for data contribution and handling more complex requirements is difficult to achieve. One participant, for instance, raised a concern about Europeana’s future work on content hosting, as such a service would add requirements for EDM. Relying on external standards was mentioned as a way for Europeana to manage this complexity. While the EDM core would remain simple, extensions based on external standards would help manage the complexity of some requirements, for example by delegating vocabulary representation to the SKOS community and annotation modelling to the Web Annotation community. Europeana’s main role would be to make sure that the requirements of the CH community are heard in these different communities, as described in the White Paper.

“EDM provenance use dcterms:provenance Statement with who, when, where (...) don't forget W3C Provenance model. Stefanie"

The question of Europeana’s role in the development of EDM was the topic of many discussions during the workshop. EDM was primarily developed for Europeana, to enable interoperability within its services. But thanks to the fruitful community work around it, it quickly evolved into a model that includes requirements from the whole CH domain. The participants agreed that community involvement is key to keeping up the momentum, but that Europeana should keep things under a certain degree of control. But how much control? While some participants argued that Europeana should focus on its own requirements, others expect Europeana to address the needs of its community. These expectations have sometimes caused frustration, and the participants agreed that even if Europeana keeps addressing both its own requirements and those of the community, it should make more explicit what will or will not be integrated into the model.

“Today, as in previous years (since 2008 events in Leuven, etc...) the issues of events, roles and metadata without data were raised. When asked why they weren't implemented yet, Europeana mentioned how difficult it was, that there were other priorities etc! Europeana had better clarify its strategy about its development priorities (...) Feedback is critical!” Michael Fingerhut

Some proposed that Europeana could work in the same way as other standards: rules should be defined for developing EDM extensions, and extensions should then be reviewed by a committee that decides what is integrated into the model. A review process would help control the level of complexity of some extensions (as experts, we always tend to make things more complex than they are!) while keeping the users’ needs in mind.

“A webinar every 6 months to update on progress. Better way to use people (...). Makx”

Other participants recognised that our community spends a lot of time on modelling questions without having the necessary use cases. On the one hand, it is difficult to develop a model without knowing the users’ needs; on the other hand, a model based only on specific requirements is not sustainable in the long term. One participant suggested that Europeana could attract more researchers to work on the most complex parts of the model and on some new use cases. The review process would then help decide which parts are integrated into the EDM core.

“Do not extend EDM into handling many details... Make rules so projects using extensions can define the "dumb down" to EDM.” Leif Andersen

EDM and data quality: the ongoing debate

Enhancing the quality of metadata exchanged in the Europeana Network has been EDM’s overall objective since the beginning. Yet, as years pass, Europeana and its partners still keep fighting the same fight - making cases, showcasing best practices, developing new hooks in the model. How can EDM be turned into a more efficient, workable means to support and quantify data quality improvements?

The very first question - what, truly, is metadata quality in EDM? - was actually quite radical. It depends on what the metadata expressed in EDM stands for: making multiple sources work together and representing different expertises and cultures. As an interoperability solution designed with flexibility as a key requirement, EDM has constructs that can be interpreted in many ways. Like other interoperability formats such as LIDO, EDM is a pivot standard, which does not solve the issue that the objects themselves are heterogeneous. It can also accommodate both simple and complex data (see above). In many respects it could be seen as a ‘distributed’ model, and this is generally regarded as a good principle to adhere to (cf. the discussion on EDM’s end goal above). So EDM is permissive, but can its use be coordinated so that it leads data providers to exchange richer data more naturally?

The first observation, which is not really new, is that, while there are too many cases of poor source data, many data quality issues come at the stage where the source data is mapped to EDM. Mappings should be handled by metadata experts, with a focus on the quality and value of the services provided on top of the data being exchanged, and how it could be perceived by Europeana’s stakeholders, notably end-users, data re-users, or the European Commission itself.

For this, providers and aggregators should be better empowered to evaluate and quantify the quality of their data for the entire ecosystem. Formal validation can be improved by making the data checks that Europeana and its partners perform at different stages of the aggregation process more precise.

Recent R&D on RDF validation standards (e.g., RDF shapes, as expressed in SHACL) and on working fine-grained validation tools (e.g., RDFUnit) deserves further investigation.

In particular, softer approaches to data checking could be designed. These would not necessarily focus on binary valid/invalid judgments, but rather on warning or outputting measures that show when the tested data fails to meet good standards for re-usability by a given user community, or only partially meets the requirements of a specific application.
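A minimal sketch of such a softer check, assuming records are dictionaries of property lists: a few hard requirements produce errors, while recommended properties only produce warnings and feed a completeness score between 0 and 1. Which properties count as required or recommended here is an illustrative assumption loosely inspired by EDM, not Europeana’s actual validation rules.

```python
from dataclasses import dataclass, field

# Illustrative rule sets (assumptions, not official EDM constraints).
REQUIRED = ["edm:type", "edm:rights"]
RECOMMENDED = ["dc:language", "dc:subject", "dcterms:spatial", "dc:creator"]


@dataclass
class Report:
    errors: list = field(default_factory=list)
    warnings: list = field(default_factory=list)
    score: float = 0.0  # share of recommended properties that are filled in

    @property
    def valid(self):
        # Only missing hard requirements make a record invalid.
        return not self.errors


def soft_check(record):
    report = Report()
    for prop in REQUIRED:
        if not record.get(prop):
            report.errors.append(f"missing required {prop}")
    # At least one of title or description must be present.
    if not (record.get("dc:title") or record.get("dc:description")):
        report.errors.append("missing dc:title or dc:description")
    # Recommended properties never invalidate a record; they lower the score.
    present = 0
    for prop in RECOMMENDED:
        if record.get(prop):
            present += 1
        else:
            report.warnings.append(f"recommended {prop} is empty")
    report.score = present / len(RECOMMENDED)
    return report
```

A record can thus be valid yet score low, which gives providers a signal about where enrichment would matter most instead of a bare pass/fail verdict.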

Crowdsourcing feedback on quality has also been suggested as an option to get a better grounding of what users generally need in terms of data quality. In addition, the general principle of developing frameworks that allow for different conformance or quality levels, possibly specific to domains, looks promising. It would encourage gradual enhancement of the data where it matters most, and better sharing of best practices for applying EDM to different types of objects.

From here, many specific features of good quality data were discussed, but none was agreed upon more widely than the ‘link density’ of the data. Europeana needs to keep encouraging the cultural sector to better link their data, and we need tools that enable source institutions to enrich their data with good links. Automated enrichment performed one step away from the source, for example by a data aggregator applying a tool such as MORe, can never match this quality. Some might even claim it cannot be referred to as "enrichment" when it results in adding errors to the original metadata.
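The workshop did not settle on a formula for link density. One simple reading, assumed here purely for illustration, is the share of property values in a record that are URIs (links to vocabularies or other resources) rather than plain literals.

```python
from urllib.parse import urlparse


def is_link(value):
    """Treat absolute http(s) URIs as links; everything else as a literal."""
    parsed = urlparse(value)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def link_density(record, ignore=("edm:rights",)):
    """Fraction of values in `record` that are links (0.0 for an empty record).

    Properties in `ignore` are skipped because they always hold a URI and
    would inflate the measure (an assumption of this sketch).
    """
    values = [v for prop, vals in record.items() if prop not in ignore for v in vals]
    if not values:
        return 0.0
    return sum(is_link(v) for v in values) / len(values)
```

Computed per record or averaged per dataset, such a measure could show whether links are being created at the source; it says nothing about whether those links are correct.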

Of course, enrichment tools are still useful if links are not created at the source. But in such cases, existing tools should align better with Europeana in terms of the format produced, the targets for enrichment, and the general focus on quality and performance.

Finally, there were some bold statements about promoting a more aggressive stance to accepting content in Europeana. For cases where data quality is too poor, such as general lack of language information on the digitized object and the data that describes it, Europeana should sometimes consider simply saying ‘no thanks’.

This problem aligns with that of relevance - sometimes Europeana ingests content (digitized objects) that is unwanted. It is generally impossible to decide how users define cultural heritage: one man's gold is another man's junk, as the saying goes, and vice versa.

However, some junk is clearly less valuable than others; consider, for instance, perfectly OCRed individual Yellow Pages, scientific articles, individual hand-written census pages, or thousands of shards from an archaeology data service. These are clearly important for specialized services but may be less relevant for a general-purpose service like Europeana, especially when one considers that in a distributed environment the specialized services could still be reached via the more general one.

Wanted: EDM extensions!

The core of EDM has remained stable over the years. However, in order to accommodate more specific requirements from the community using it, extensions have been developed for representing various levels of data. During the workshop, participants expressed their interest in two particular requirements.

First, the participants came back to an important question that has been raised repeatedly since the creation of Europeana: how should Europeana integrate non-digital objects into its services, and how can EDM be used to represent them? As a digital library, Europeana has chosen to accept only descriptions of CH objects that have digital representations. This content strategy made sense in the early days of Europeana, when large digitisation projects had been initiated throughout Europe. However, the slow-down of those programmes, as well as the reality of the digitisation landscape (according to ENUMERATE, only 10% of CH content has been digitised), brings the issue back to the fore.

The gaps in digitisation affect the representation of cultural heritage objects (CHOs) in Europeana. This problem has been looked at in detail in the Report on EDM for hierarchical objects. While the model can theoretically represent all levels of a complex CH object, the absence of a digital object means that some levels containing key descriptive information are omitted. Collection-level information is important in this context, as it can provide metadata about CH objects that have either disappeared or are not digitised. The workshop participants insisted on the importance for EDM of representing non-digital objects if Europeana wants to provide a complete overview of the cultural heritage landscape.

“EDM for sound recordings made us think of better connecting bibliographic data to digital objects metadata. Anila Angjeli, BnF”

The second requirement concerns the representation of “Events” in EDM. As pointed out by the participants, this requirement isn't new for Europeana, as the need for this entity has been expressed many times! The participants asked why an Event class is still not implemented in EDM. It is true that we have not implemented an Event class in the EDM we use for data aggregation; however, the class has been defined in the EDM Definitions and could be re-used in other application profiles. The participants acknowledged that Events are difficult to identify in an aggregation environment: firstly, because there are many definitions of what an event is; and secondly, because there is no way of uniquely identifying events. Defining Event as an entity in EDM would imply that the resource is identified by a persistent URI. Yet apart from the recent work from Wikidata, there is no authority for reference Event URIs.

“EDM=COOL but ...could you make it even cooler by adding events and roles ?(...)”

To clarify their requirements, the participants came back to the definition of Event. Generally, they agreed that Events have to be considered as enablers for representing nuanced data about the lifecycle of a CH object, such as its creation, finding or publication.

The discussion on Events is tied to the notion of Roles. The representation of the roles of agents within an event seemed to be one of the most important use cases, which led some participants to ask whether the real need was for Role rather than Event. The participants concluded that the requirements for Events should be described in more detail and that data examples should be collected. The analysis of the current EDM and its comparison with other models such as CIDOC-CRM will be relevant only when this first collection step is complete (via the creation of a new EuropeanaTech Task Force, for instance).

“Implement Events & Role BUT get organisations to do the work (...) . Gordon Mc Kenna”

As we hope you’ve found from reading these notes, the workshop was hugely fruitful and has provided Europeana with lots to work on in the coming months. We thank all those who attended, and shared their thoughts, feedback and expertise.

=========

[1] Lizzy Jongma’s demonstration was based on a search query for the painting “The Night Watch” (http://www.europeana.eu/portal/record/90402/SK_C_5.html). She showed how difficult it is to find the masterpiece in Europeana and how confusing the display was for the user.

[2] Marko Knepper’s presentation available at http://pro.europeana.eu/files/Europeana_Professional/EuropeanaTech/EDM_workshop_112015/EDM_PerformingArts_MKnepper.pdf